CLASS: Contrastive Learning via Action Sequence Supervision for Robot Manipulation

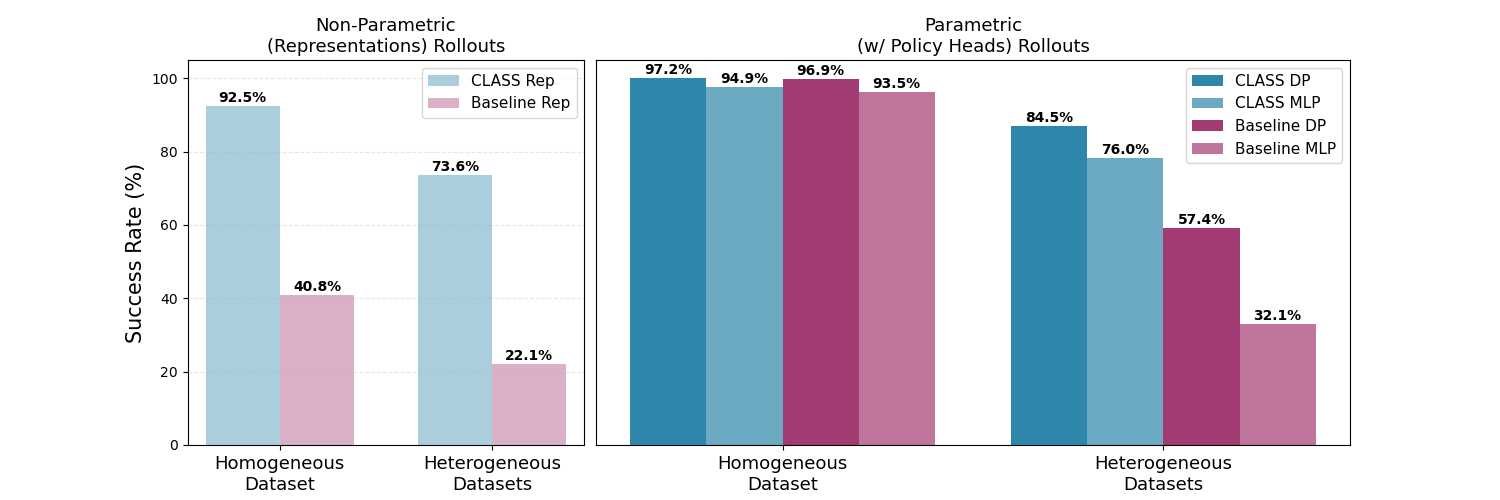

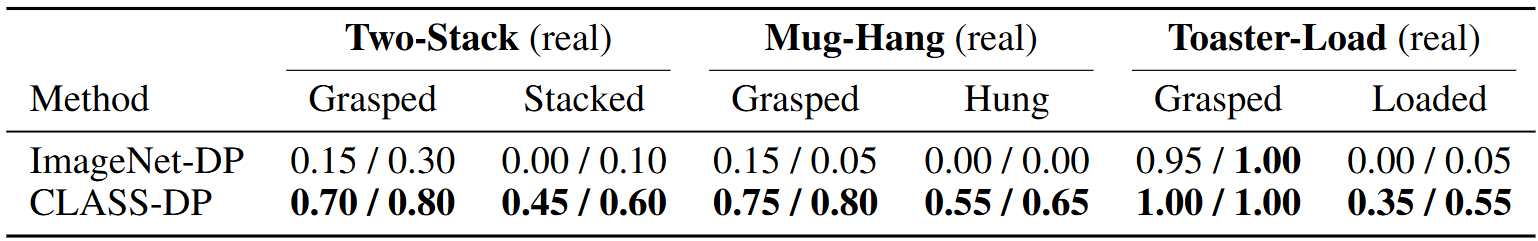

Recent advances in Behavior Cloning (BC) have led to strong performance in robotic manipulation, driven by expressive models, sequence modeling of actions, and large-scale demonstration data. However, BC faces significant challenges when applied to heterogeneous datasets, such as those with visual shifts from varying camera poses or object appearances, where performance degrades despite the benefits of learning at scale. This stems from BC’s tendency to overfit to individual demonstrations rather than capture shared structure, which limits generalization. To address this, we introduce Contrastive Learning via Action Sequence Supervision (CLASS), a method for learning behavioral representations from demonstrations using supervised contrastive learning. CLASS leverages weak supervision from similar action sequences identified via Dynamic Time Warping (DTW) and optimizes a soft InfoNCE loss with similarity-weighted positive pairs. We evaluate CLASS on 5 simulation benchmarks and 3 real-world tasks, where it achieves competitive results using retrieval-based control with the learned representations alone. Most notably, for downstream policy learning under significant visual shifts, Diffusion Policy with CLASS pre-training achieves an average success rate of 75%, while all other baseline methods fail to perform competitively.
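To illustrate how DTW can score the similarity of two action sequences, the following is a minimal sketch of the classic DTW recurrence. The function name `dtw_distance` and the Euclidean per-step cost are assumptions made for illustration; the cost function, normalization, and any thresholding used by CLASS itself may differ.

```python
import numpy as np

def dtw_distance(a, b):
    """Accumulated DTW cost between two action sequences.

    a: array-like of shape (T1, D), b: array-like of shape (T2, D).
    The per-step cost is the Euclidean distance (an illustrative choice).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    t1, t2 = len(a), len(b)
    # Pairwise cost between every step of a and every step of b.
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # acc[i, j] = minimal cost of aligning a[:i] with b[:j].
    acc = np.full((t1 + 1, t2 + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, t1 + 1):
        for j in range(1, t2 + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(
                acc[i - 1, j],      # advance only in a
                acc[i, j - 1],      # advance only in b
                acc[i - 1, j - 1],  # advance in both
            )
    return float(acc[t1, t2])

# The same motion executed at a different speed aligns with zero cost.
slow = [[0.0], [1.0], [1.0], [2.0]]
fast = [[0.0], [1.0], [2.0]]
print(dtw_distance(slow, fast))  # 0.0
```

Because DTW tolerates differences in timing, two demonstrations that perform the same motion at different speeds receive a small distance, which is what makes it a plausible signal for pairing states with similar upcoming actions.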

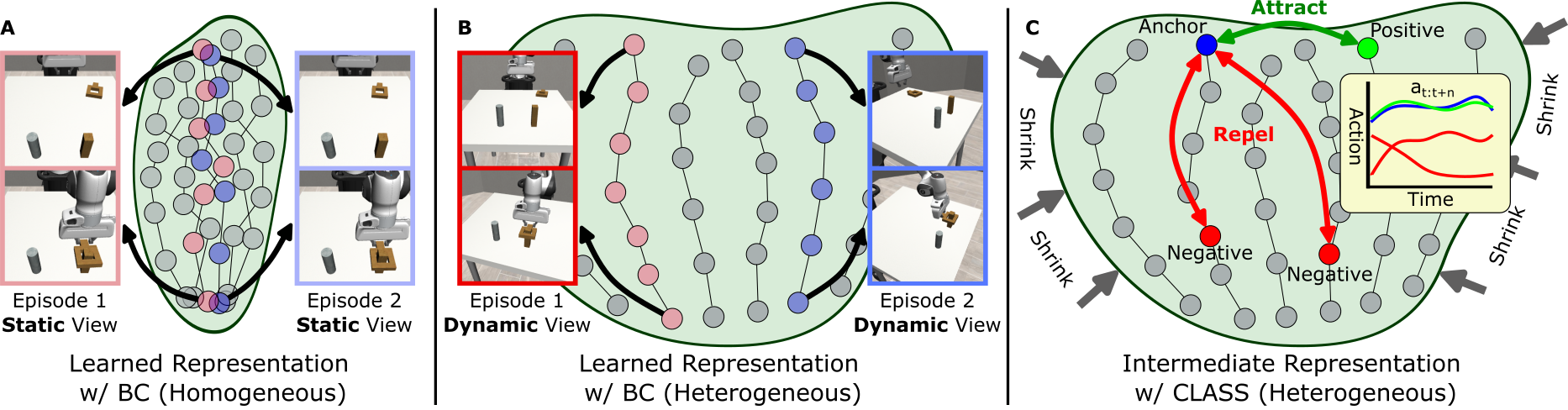

Comparison between Behavior Cloning (BC) and Contrastive Learning via Action Sequence Supervision (CLASS). (A) Given homogeneous demonstrations with consistent visual conditions, BC learns a compact representation with high transferability. (B) Given heterogeneous demonstrations, such as those with varying viewpoints, BC overfits to individual state-action pairs and generalizes poorly. (C) CLASS addresses this by attracting states with similar action sequences and repelling those with dissimilar ones, using a soft supervised contrastive learning objective to learn more robust and composable representations.
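The attract/repel behavior in panel (C) can be sketched as a similarity-weighted InfoNCE loss. The version below is a generic soft supervised contrastive loss written under stated assumptions, not the paper's exact formulation: the function name `soft_info_nce`, the cosine-similarity logits, the temperature value, and the per-anchor weight normalization are all illustrative choices. The weight matrix `w` is assumed to hold non-negative pair similarities (for example, derived from action-sequence distances), with zero meaning "not a positive pair".

```python
import numpy as np

def soft_info_nce(z, w, temperature=0.1):
    """Similarity-weighted InfoNCE over a batch of embeddings.

    z: (N, d) embeddings with non-zero norm; w: (N, N) non-negative pair weights.
    """
    z = np.asarray(z, dtype=float)
    w = np.array(w, dtype=float)  # copy so the caller's matrix is untouched
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    logits = z @ z.T / temperature
    np.fill_diagonal(logits, -np.inf)  # an anchor is never its own candidate
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Zero out the -inf diagonal so that 0 * -inf does not produce NaN.
    log_prob = np.where(np.isfinite(log_prob), log_prob, 0.0)
    np.fill_diagonal(w, 0.0)
    row_sum = w.sum(axis=1)
    has_pos = row_sum > 0  # skip anchors that have no positive pair
    if not has_pos.any():
        return 0.0
    per_anchor = -(w * log_prob).sum(axis=1)[has_pos] / row_sum[has_pos]
    return float(per_anchor.mean())

# Two tight clusters of embeddings: {0, 1} and {2, 3}.
z = np.array([[1.0, 0.0], [1.0, 0.01], [0.0, 1.0], [0.01, 1.0]])
w_aligned = np.array([[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]])
w_crossed = np.array([[0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, 0]])
print(soft_info_nce(z, w_aligned) < soft_info_nce(z, w_crossed))  # True
```

The loss is low when heavily weighted pairs are already close in embedding space and high when they are far apart, so minimizing it pulls together states whose pair weights are large while pushing the remaining batch members away.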

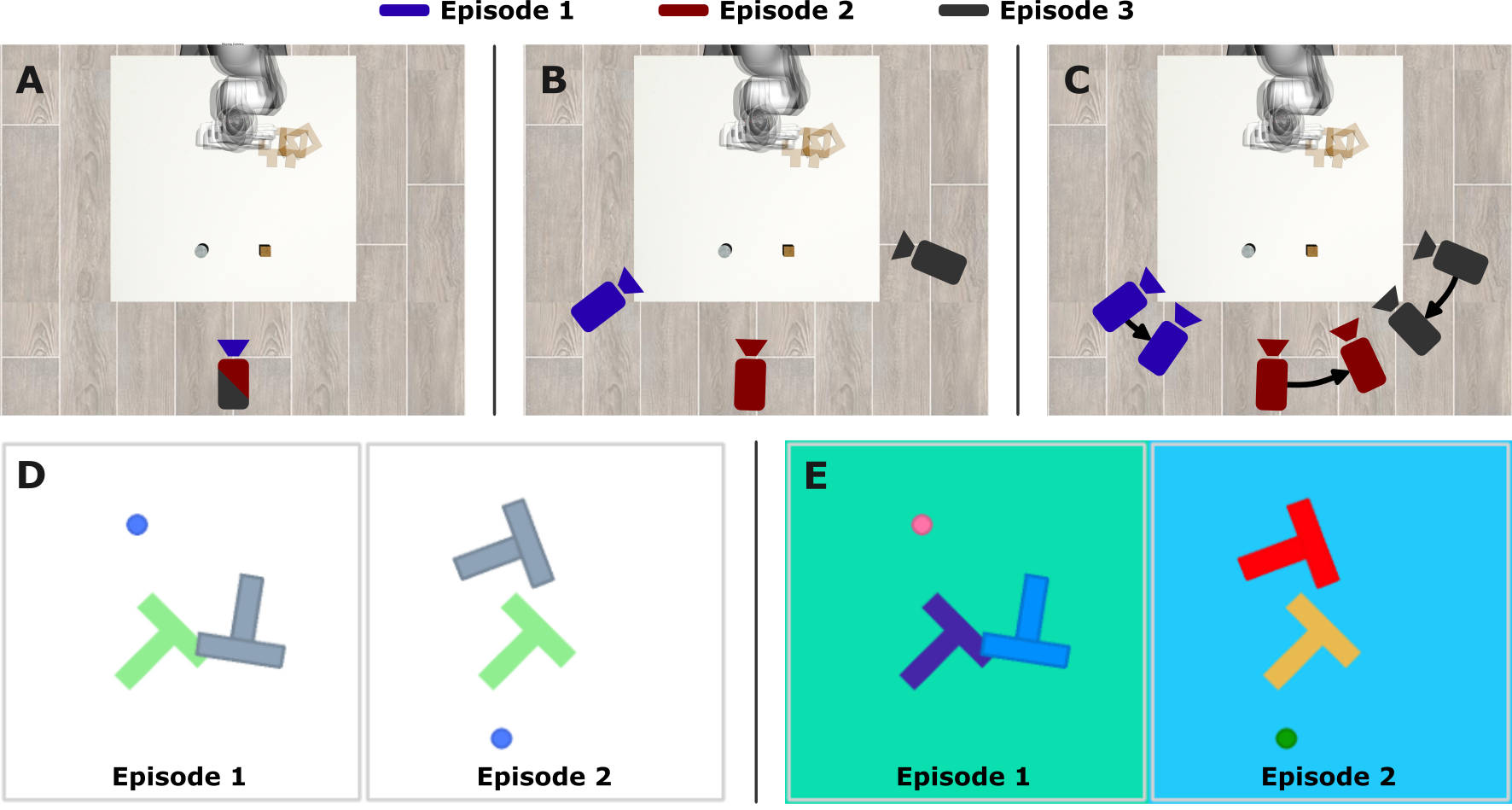

Homogeneous and heterogeneous data collection setups. (A) Fixed camera (Fixed-Cam), commonly used in conventional behavior cloning pipelines. (B) Random static camera (Rand-Cam), where the camera pose is randomly sampled at the start of each episode but remains fixed during the episode. (C) Dynamic camera (Dyn-Cam), where a randomly initialized camera moves in a random direction during the episode while maintaining a consistent look-at target. (D) Fixed object color (Fixed-Color), commonly assumed in vision-based behavior cloning tasks. (E) Random object color (Rand-Color), where the color of the objects is randomly varied in each demonstration.
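As a rough illustration of the Rand-Cam setup, the sketch below samples a camera position around a target and orients the camera toward it. Everything specific here is an assumption: the function names, the spherical sampling ranges, the z-up world frame, and the OpenGL-style convention that the camera looks along its negative z-axis are hypothetical and are not taken from the paper.

```python
import numpy as np

def look_at_rotation(cam_pos, target, up=(0.0, 0.0, 1.0)):
    """Camera-to-world rotation; columns are the camera's right, up, and backward axes."""
    cam_pos = np.asarray(cam_pos, dtype=float)
    target = np.asarray(target, dtype=float)
    forward = target - cam_pos
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, np.asarray(up, dtype=float))
    right = right / np.linalg.norm(right)  # undefined if forward is parallel to up
    true_up = np.cross(right, forward)
    return np.stack([right, true_up, -forward], axis=1)

def sample_camera_pose(rng, target,
                       radius_range=(0.8, 1.2),
                       azimuth_range=(-np.pi / 4, np.pi / 4),
                       elevation_range=(np.pi / 6, np.pi / 3)):
    """Sample a position on a spherical shell around the target (hypothetical ranges)."""
    target = np.asarray(target, dtype=float)
    r = rng.uniform(*radius_range)
    az = rng.uniform(*azimuth_range)
    el = rng.uniform(*elevation_range)
    offset = r * np.array([np.cos(el) * np.cos(az),
                           np.cos(el) * np.sin(az),
                           np.sin(el)])
    pos = target + offset
    return pos, look_at_rotation(pos, target)

# One pose per episode, held fixed for the whole episode (Rand-Cam).
rng = np.random.default_rng(0)
position, rotation = sample_camera_pose(rng, target=[0.0, 0.0, 0.0])
```

Under the same assumptions, a Dyn-Cam variant could update the position at every step and recompute `look_at_rotation` so that the look-at target stays consistent while the camera moves.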

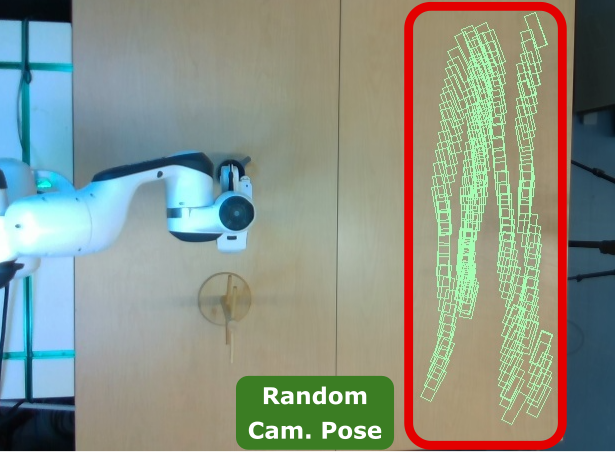

Visualization of camera poses for Mug-Hang

Individual camera positions from 25 random episodes for Mug-Hang

@inproceedings{lee2025class,
  title={CLASS: Contrastive Learning via Action Sequence Supervision for Robot Manipulation},
  author={Lee, Sung-Wook and Kang, Xuhui and Yang, Brandon and Kuo, Yen-Ling},
  booktitle={Conference on Robot Learning (CoRL)},
  year={2025},
}